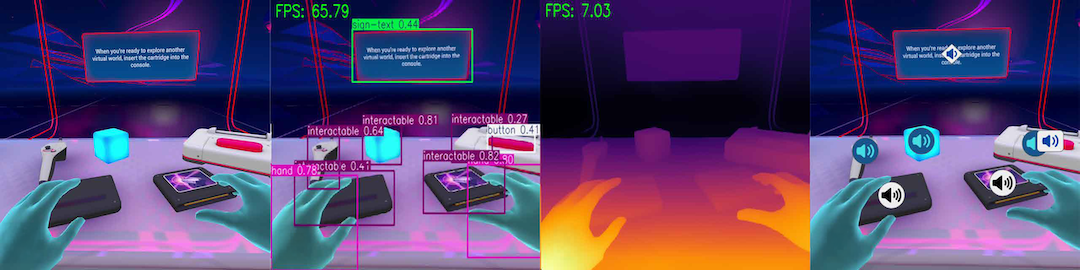

VRSight provides spatial audio feedback for blind and low vision users in virtual reality (VR) environments by combining real-time object detection, zero-shot depth estimation, and multimodal large language models. It delivers real-time audio descriptions and spatial interaction assistance without per-app developer integration, creating the first post hoc “3D screen reading” system for VR.

VRSight: An AI-Driven Scene Description System to Improve Virtual Reality Accessibility for Blind People

Daniel Killough, Justin Feng*, Zheng Xue “ZX” Ching*, Daniel Wang*, Rithvik Dyava*, Yapeng Tian, Yuhang Zhao

*Authors 2-5 contributed equally to this work.

Presented at UIST 2025 in Busan, Republic of Korea.

Click Here to View the Repository on GitHub!

Click Here to Read the Full Paper on ACM DL!